This is a rapidly changing field and there have been new updates. Please see Long-Distance vMotion: Updates for the latest changes.

This is the second part in a multi-part series on the topic of Long-Distance vMotion. Part 1 introduced us to disaster recovery as practiced today and laid the foundation to build upon.

When building out Long-Distance vMotion (LDVM), we still need to focus on the same components we focused on when building out disaster recovery. We will take the leap from a recovery time of 5 minutes to continuous, non-disruptive operation. We’ll need to change our network from two different subnets in two different datacenters to one stretched subnet. We’ll need to take our mirrored storage and create what I call a single shared storage image. Last, we’ll get rid of Site Recovery Manager (SRM) and replace it with a VMware vSphere split cluster.

There will be some rules we’ll need to adhere to (limits) to make this all work. For the hypervisor, we’ll use vSphere 4 or later. VMware vSphere requires a minimum bandwidth of 622 Mbps (OC12) and a round-trip time (RTT) latency of no more than 5 ms. Our storage volumes or LUNs will need to be open for read-write access in both datacenters simultaneously, using FC, iSCSI or NFS. We’ll also need enough spare bandwidth to achieve continuous mirroring with low latency.
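The bandwidth and latency limits are easy to turn into a quick sanity check before any design work starts. The following is a minimal Python sketch: the function name and the sample link measurements are hypothetical, and only the 622 Mbps and 5 ms thresholds come from this post.

```python
from typing import List

# Thresholds quoted in this post for vSphere vMotion between sites.
MIN_BANDWIDTH_MBPS = 622.0  # OC12
MAX_RTT_MS = 5.0            # round-trip time


def check_link(bandwidth_mbps: float, rtt_ms: float) -> List[str]:
    """Return a list of problems; an empty list means both limits are met."""
    problems = []
    if bandwidth_mbps < MIN_BANDWIDTH_MBPS:
        problems.append(
            f"bandwidth {bandwidth_mbps:g} Mbps is below the "
            f"{MIN_BANDWIDTH_MBPS:g} Mbps minimum"
        )
    if rtt_ms > MAX_RTT_MS:
        problems.append(
            f"RTT {rtt_ms:g} ms exceeds the {MAX_RTT_MS:g} ms maximum"
        )
    return problems


if __name__ == "__main__":
    # Hypothetical measurements for two candidate inter-site links.
    print(check_link(1000, 3.2))  # within both limits
    print(check_link(155, 7.5))   # fails both
```

A check like this only covers the two numeric limits. The storage and spare-bandwidth requirements still need to be reviewed by hand.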

First we’ll address the network.

Building The Stretched Subnet

When Cisco came out with the Nexus 7000, one of the most compelling features they introduced was Overlay Transport Virtualization (OTV). With OTV, we can easily extend a subnet between two datacenters while achieving spanning tree isolation, network loop protection and broadcast storm avoidance. There are other technologies that can also stretch a subnet between datacenters, but with OTV there aren’t many complex tunnels to set up and maintain.

Cisco’s OTV carries layer 2 over layer 3 without having to change your network design: Ethernet frames are encapsulated in IP across the link. You can have unlimited distance, no bandwidth requirements and you can dynamically add additional sites without having to change the network design of the existing ones. Without going too deep into the technical details, it makes stretching the subnet between the two sites straightforward, and its simplicity makes ongoing administration easier.

Today, OTV is only available on the Nexus 7000 and has to run in its own Virtual Device Context (VDC), but Cisco plans to release it on other platforms in the future.

What About Storage vMotion?

Once you have the subnet stretched between the two sites, you can use traditional vSphere vMotion coupled with storage vMotion. We can go the maximum distance: 5 ms RTT is a vSphere limit, which equates to roughly 400 km (250 mi) circuit distance. With traditional storage vMotion, we can go the full 400 km distance and go between dissimilar storage hardware at each site. We can use any protocol vSphere supports: FC/FCoE, iSCSI and NFS.
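To see how a 5 ms RTT maps to circuit distance, here is a small Python sketch. It assumes light travels about 200 km per millisecond in fiber, and the 1 ms overhead allowance in the last call is purely an illustrative assumption showing one way a 400 km figure could arise. Neither number is a VMware specification.

```python
# Assumption: light in optical fiber covers about 200 km per millisecond
# (roughly two thirds of its speed in a vacuum). Real circuits vary.
FIBER_KM_PER_MS = 200.0


def propagation_rtt_ms(circuit_km: float) -> float:
    """Round-trip propagation delay over a fiber circuit of the given length."""
    return 2 * circuit_km / FIBER_KM_PER_MS


def max_circuit_km(rtt_budget_ms: float, overhead_ms: float = 0.0) -> float:
    """Longest circuit that fits the RTT budget after fixed overhead is removed."""
    return (rtt_budget_ms - overhead_ms) / 2 * FIBER_KM_PER_MS


if __name__ == "__main__":
    print(propagation_rtt_ms(400))               # 4.0
    print(max_circuit_km(5.0))                   # 500.0
    print(max_circuit_km(5.0, overhead_ms=1.0))  # 400.0
```

Note that the distance is circuit length, not straight-line distance between the sites. A fiber path that detours between cities consumes the budget faster than a map would suggest.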

There are some limitations.

First, instead of just copying the memory, we’re now also copying all the virtual disks. Each VM could take over 15 minutes to move just 100 GB of storage alone. Multiply that by how many VMs you plan to move. If your link is being used for anything else, like replication or Voice over IP (VoIP), you’ll get less than the whole link. The biggest limitation: you cannot migrate Raw Device Mappings (RDMs), a common type of vSphere volume for databases, Microsoft Exchange and large (over 2 TB) storage volumes.
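The copy-time estimate is simple arithmetic, sketched below in Python. The function name and the 50% link-share figure are hypothetical. The calculation uses decimal units and ignores protocol overhead, so real migrations will be slower.

```python
def transfer_minutes(size_gb: float, link_mbps: float,
                     usable_fraction: float = 1.0) -> float:
    """Minutes to copy size_gb over a link, ignoring protocol overhead.

    Uses decimal units: 1 GB = 8000 megabits.
    """
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    megabits = size_gb * 8000
    seconds = megabits / (link_mbps * usable_fraction)
    return seconds / 60


if __name__ == "__main__":
    # 100 GB over a full 622 Mbps (OC12) link.
    print(round(transfer_minutes(100, 622), 1))       # 21.4
    # Same VM when only half the link is available (hypothetical share).
    print(round(transfer_minutes(100, 622, 0.5), 1))  # 42.9
```

Multiplying the per-VM result by the number of VMs gives a rough lower bound for evacuating a site over a single link.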

While many of these limitations can be accepted, it’s not an optimal solution. Next we’ll look, in alphabetical order by vendor, at storage solutions that solve the problem.

Shared Storage Image with EMC VPLEX Metro

VMware KB: vMotion over Distance support with EMC VPLEX Metro

It is a pure Fibre Channel (FC) solution with full fault-tolerant node redundancy at each site. You will need to add FC extension, such as dark fiber (long-wave, CWDM or DWDM) or FCIP. You only need 2 FC extension links (ISLs) to make the solution work, the smallest number in all the designs, and these links are often the most expensive part of the solution. You can use all the FC best-practice designs, such as transit VSANs with Inter-VSAN Routing (IVR) or LSANs for fault domain isolation. You can utilize VMware HA Clusters and DRS.

A third site can be utilized as well with a witness agent running in a VM or server to provide split-brain protection. Perhaps the most important feature that EMC has going is it doesn’t suffer from the storage traffic trombone, as many of the other solutions do, since the volumes are opened locally at each site. We’ll talk more about the traffic trombones and how to overcome them in Long-Distance vMotion: Part 3.

We are deploying this solution with customers today.

Shared Storage Image with IBM SVC

Implementing the IBM System Storage SAN Volume Controller V6.1

It can be FC or iSCSI on the front end to the servers, but only FC on the back end. You’ll need FC extension on the front end (OTV will work for iSCSI), and at least for the front end you can use all the best-practice SAN designs. On the back end, however, you’re stuck. There can be no ISLs between sites and you’ll need traditional long-wave (LW) FC SFPs to the storage, which is one reason it’s limited to 10 km. The other is that beyond 10 km, the cache updates start to bog the nodes down. You are also required to have a third site with storage capable of hosting an enhanced quorum disk for split-brain protection, again with no ISLs, with LW SFPs, and with less than 10 km between all three sites. You will need 2 dark fiber connections for the front end and a minimum of 6 dark fiber connections for the back end. For each additional piece of storage per site in that IO group, add 2 more pairs of dark fiber per system.

Unlike the EMC VPLEX solution, traffic will trombone to the active node at some point, either at the source or when moved to the destination, due to MPIO (multipath input/output) active/passive drivers sending all traffic to the preferred node.

While I wouldn’t recommend this solution, I do know of at least one customer using it, even with all the limitations mentioned. If fiber is free to you across campus, this might be for you.

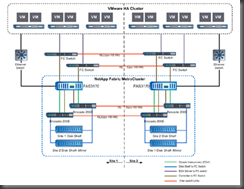

Shared Storage with NetApp MetroCluster or V-Series MetroCluster

A Continuous-Availability Solution for VMware vSphere and NetApp

NetApp offers the most protocol flexibility with FC/FCoE, NFS or iSCSI on the front end, which can use all the FC best-practice designs, such as transit VSANs with IVR or LSANs for fault domain isolation. On the native non-V-Series solution, the back end requires FC today and you have to use the Brocade switches which are included with and hard coded into the solution. You will use 4 FC connections between sites on this solution. You can utilize VMware HA Clusters and DRS.

There is no automatic split-brain protection. In the case of total network failure (if you skimp on redundant routing diversity), you will have to enter commands at the site you want to survive to bring those volumes back online. Any single component failure, however, is handled completely automatically. Like the IBM SVC, traffic will trombone to the active node at some point, either at the source or when moved to the destination, due to using MPIO ALUA (asymmetric logical unit access) drivers.

We have designed and deployed NetApp MetroClusters at quite a few customers; people are quite happy with them and they perform well.

Shared Storage with NetApp FlexCache

Whitepaper: Workload Mobility Across Data Centers

FlexCache has a primary/secondary relationship between volumes. All writes pass through a secondary back to their primary volume. If the primary site is down, writes will cache up at the secondary. With a split-brain you can run at the secondary sites. Like the EMC VPLEX, there is no traffic trombone, because volumes are open locally without having to cross to the second site. The controllers handle all the coordination, locking and coherency.
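The write path described here can be pictured with a toy model. The Python sketch below is only an illustration of the behavior as stated in this post (writes pass through to the primary, and queue at the secondary while the primary is unreachable). The class and method names are hypothetical, and it is not NetApp’s implementation or API.

```python
class PrimaryVolume:
    """Toy stand-in for the primary (origin) volume."""

    def __init__(self):
        self.blocks = {}
        self.online = True


class SecondaryCache:
    """Toy stand-in for a secondary volume at the other site."""

    def __init__(self, primary):
        self.primary = primary
        self.cache = {}    # data held locally at this site
        self.pending = []  # writes queued while the primary is unreachable

    def write(self, key, data):
        """Keep a local copy, then pass the write through or queue it."""
        self.cache[key] = data
        self.pending.append((key, data))
        self.flush()

    def flush(self):
        """Send queued writes to the primary, in order, if it is reachable."""
        while self.pending and self.primary.online:
            key, data = self.pending.pop(0)
            self.primary.blocks[key] = data

    def read(self, key):
        """Serve from the local copy, fetching from the primary on a miss."""
        if key not in self.cache:
            if not self.primary.online:
                raise LookupError(f"{key!r} not cached and primary is offline")
            self.cache[key] = self.primary.blocks[key]
        return self.cache[key]


if __name__ == "__main__":
    primary = PrimaryVolume()
    secondary = SecondaryCache(primary)

    secondary.write("a", 1)      # passes straight through
    primary.online = False
    secondary.write("b", 2)      # queues at the secondary
    print(len(secondary.pending), secondary.read("b"))
    primary.online = True
    secondary.flush()            # queued write reaches the primary
    print(primary.blocks)
```

The model leaves out everything the controllers actually have to solve, such as locking and coherency between multiple secondaries.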

I find this an intriguing design, but I still don’t think NFS is the protocol that fits all needs. People are deploying NFS more with vSphere and I’ve seen Oracle systems running SAP over NFS with tremendous IO throughput, but not every application supports NFS. It might be good for vSphere alone, but these solutions often need to support more than just vSphere. I think this could mature nicely as NetApp merges the cluster and traditional block (7-mode) versions of Data ONTAP going forward.

VMware vSphere Split Clusters

VMware currently requires a minimum of 622 Mbps bandwidth and a maximum of 5 ms RTT latency. Hopefully later versions will relax these limitations enough to go further.

VMware also doesn’t provide the intelligence for designating different sites in a split-cluster configuration, so care has to go into how nodes are deployed. If you decide to implement load balancing or fault tolerance, additional consideration is needed to provide adequate bandwidth between sites and to ensure that workloads don’t spend a lot of time going between sites unnecessarily.

These considerations can be accomplished with proper planning with a vSphere architect.

Geographic Disaster Recovery

When building out Long-Distance vMotion today, we need to stay within synchronous distances of 100 km (or 400 km with FlexCache), but what about geographic protection beyond that for disaster recovery?

We can add back our familiar WAN (or OTV over the WAN), traditional asynchronous storage mirroring and SRM between the LDVM datacenters and our remote disaster recovery site. The LDVM datacenters will appear as a single site with the DR site as its target. Now we have non-disruptive business continuity locally, with disaster recovery geographically.

Part 3 will look at the technical problems you still need to overcome once the architecture is built out, where I’d like to see vendors go in the future and my own conclusions.

For updates and addendums to this post, please see Long-Distance vMotion: Updates.

Disclaimer: I am a NetApp Employee

First of all, well done on the blog.

Just wanted to clarify that the NetApp MetroCluster solution can have a tie-breaker which can react to a split-brain situation. The tie-breaker solution is part of Microsoft SCOM through our On Command Plugin for Microsoft, in case the customer is using it, or it can be installed on a separate node in case the customer doesn't have SCOM in their environment.

Thanks for the clarification. I was familiar with the SCOM plugin, but didn't know it could play the tie-breaker role.

I'll be sure to revisit my current MetroCluster customers, since they're older than the SCOM plugin.